I am currently a Senior Principal Software Engineer at Medtronic – Digital Surgery in London.

2023-now - Senior Principal Software Engineer

I lead the development of real-time AI and video processing pipelines for both robotic-assisted and conventional laparoscopic surgery. My work focuses on optimizing and deploying deep learning models on edge devices with NVIDIA GPUs to ensure reliable performance in the operating room. I work closely with a multidisciplinary team of machine learning researchers, embedded engineers, and designers to deliver high-performance, secure, and reliable software for next-generation medical devices.

2019-2023 - Senior Robotics Engineer, Autonomous Navigation

At Arrival, I developed software for autonomous navigation and simulation of mobile robots deployed in microfactories. I was responsible for the vision system of the Autonomous Mobile Robot fleet, including automated camera calibration and validation tools, achieving high-precision positioning and robust fleet operations.

2017-2019 - Autonomous Driving Engineer

In September 2017, I joined Italdesign as an Autonomous Driving Engineer. My primary task was to research, implement and deploy perception, mapping/localization and control algorithms for autonomous vehicles. I worked on several projects, including:

- Pop.Up Next in collaboration with Airbus and Audi: an electric, modular and autonomous flying car.

- Wheem-i, formerly known as Moby: the first mobility service designed for wheelchair users.

- InTo: a system for measuring the flow of passengers with LEDs that reveal which railcars are the least crowded for boarding.

- Roborace: a machine learning research and development project to push the limits of the robocar DevBot 2.0.

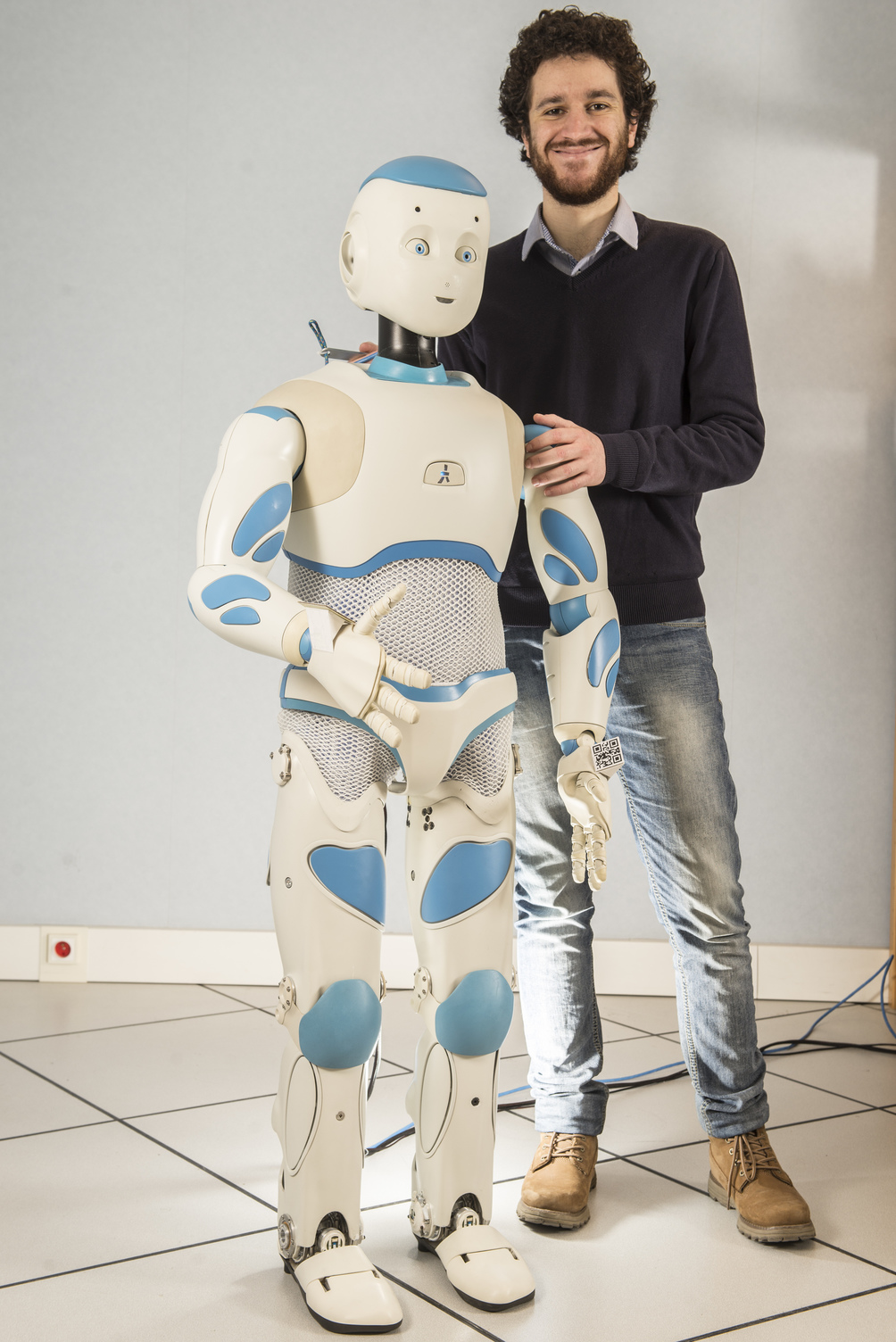

2013-2017 - R&D Robotics Engineer

In November 2013, I joined the Lagadic team at INRIA Rennes, led by François Chaumette, as an R&D Robotics Engineer. My goal was to make robots smarter, helping them to perceive and understand our world and to take action autonomously.

I worked on real-time detection, pose estimation and tracking, and I implemented state-of-the-art visual servoing algorithms that significantly improved the robustness and accuracy of several types of robots (mobile, humanoid and industrial robots, and drones). To validate these approaches, I created numerous demonstrations using 2D and RGB-D cameras, radars and microphones.

I also worked on improving the perception and motion of the humanoid robots Romeo and Pepper. These robots can now track a target with their gaze, detect and follow a person, detect and grasp objects, deliver them to a human, manipulate them using two hands simultaneously and open a door.

I contributed to the development of a framework based on ROS, MATLAB/Simulink and V-REP for fast prototyping of robot control algorithms. This system allows sensor-based control algorithms to be tested first on simulated robots in V-REP and later on the real robots, with only a few changes.

In the last few years, I supervised several student internships and participated as a mentor in Google Summer of Code. I also published scientific articles in IEEE Robotics and Automation Letters (RA-L) and at ICRA’17 and Humanoids’16.

My background

I graduated in Computer Engineering in Genoa (Italy) and later obtained a double degree: a Master in Robotics Engineering (University of Genoa) and the Master ARIA in Advanced Robotics (École Centrale de Nantes). I completed an internship at IRCCYN (now LS2N) in Nantes, under the supervision of Philippe Martinet, focusing on high-speed robot control using computer vision. My thesis aimed to develop a vision-based method for estimating the pose and velocity of a high-speed parallel robot at very high frequencies (1–2 kHz). This work was part of the French ANR Project ARROW, which seeks to design accurate and fast robots with large operational workspaces.